Live Link Face Reviews

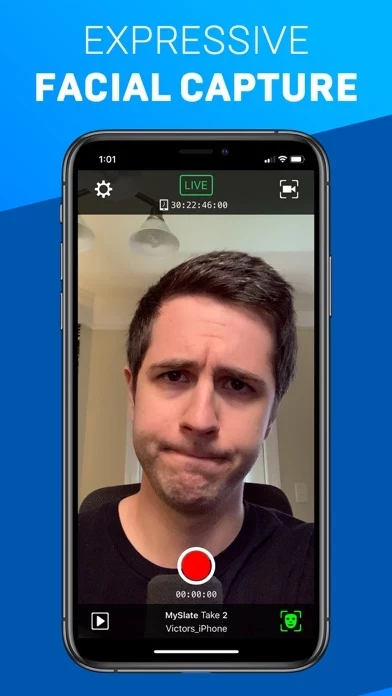

Published by Unreal Engine on 2025-02-17

About: Live Link Face for effortless facial animation in Unreal Engine. Capture performances for MetaHuman Animator to achieve the highest-fidelity results, or stream facial animation in real time from your iPhone or iPad for live performances.

Capture facial performances for MetaHuman Animator:
- MetaHuman Animator uses Live Link Face to capture performances on iPhone, then applies its own processing to create high-fidelity facial…